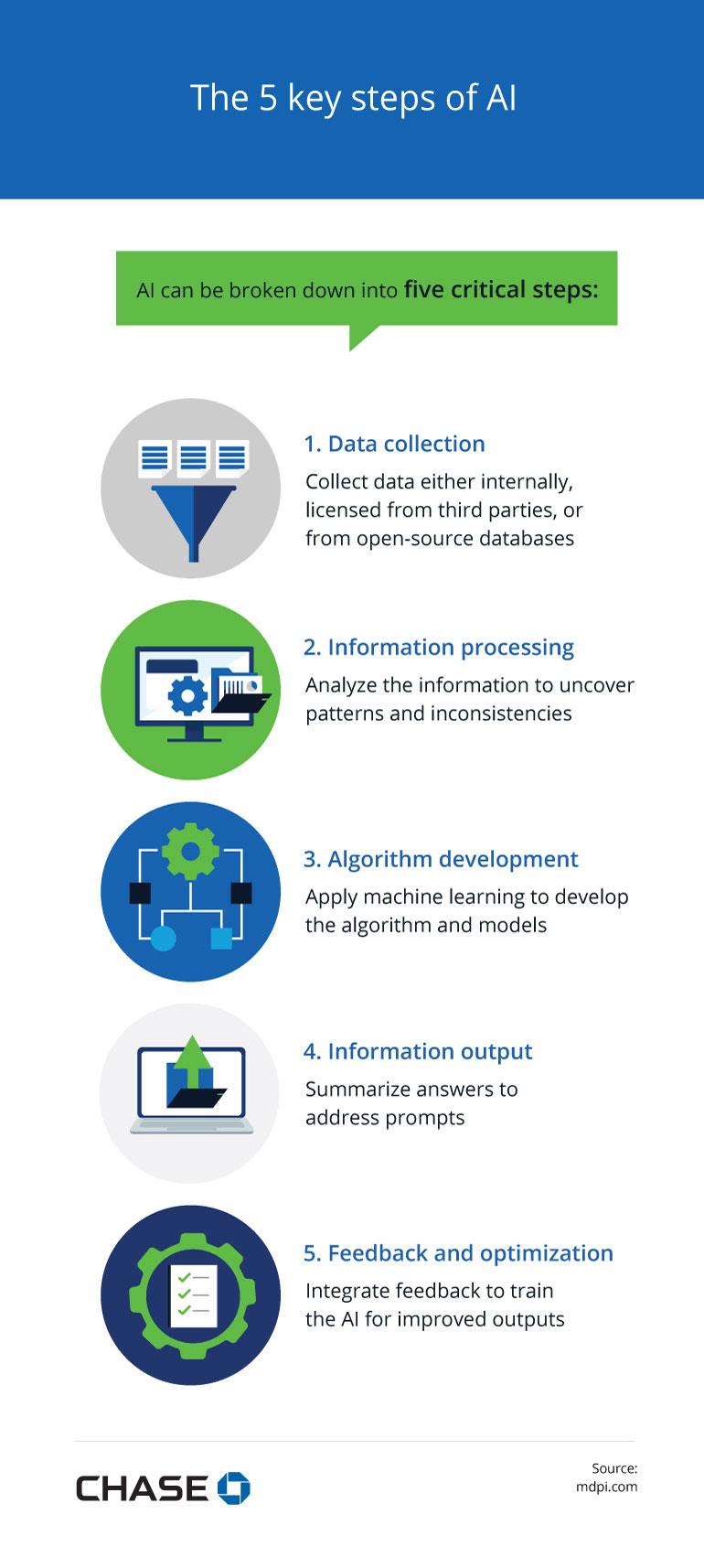

The shop lights come on, the inbox fills, and the to‑do list grows faster than the day. Somewhere between inventory, invoices, and customer questions, you keep hearing that AI could help. But should it? And where would you even begin? This guide is a practical map for small businesses considering AI: not a sales pitch, not a technical lecture. We’ll clarify what AI is and isn’t, where it can realistically support your operations, and what it demands in return: data quality, time, budget, and change management. You’ll find ways to start small, evaluate tools and vendors, set guardrails for privacy and fairness, and measure whether the effort pays off.

Think of AI as another tool on your workbench. Used with intent, it can save time and sharpen decisions; used without a plan, it can add noise and cost. Before you start, it helps to know the terrain. Let’s chart it, step by step.

Identify high-impact use cases with clear success metrics and manageable risk

Start where friction is highest and impact is visible. Look for repetitive, time‑sensitive tasks that touch revenue, cost, or customer experience, and where a small boost compounds quickly: think inbox triage, invoice review, or FAQ replies at peak hours. Favor jobs with clean, accessible data and a small blast radius if something goes wrong. Treat each candidate like a mini‑investment: what outcome improves, how fast can you test it, and who owns the result?

- Value lever: Money in, cost out, or risk down?

- Frequency × pain: How often does it happen, and how annoying is it today?

- Data readiness: Is the information complete, legal to use, and structured?

- Human-in-the-loop: Can a person review or override early runs?

- Blast radius: If it fails, who’s affected and how badly?

- Owner + runway: A clear directly responsible individual (DRI), a 2-6 week pilot window, and budget guardrails.

- Metric + baseline: One primary KPI and a measured “before” state.
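One way to make the checklist comparable across candidates is a simple weighted score. The sketch below is illustrative only: the 1-5 scales, the weights, and the two example candidates are assumptions made for the example, not a recommended formula.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    value: int           # 1-5: size of the revenue, cost, or risk lever
    frequency: int       # 1-5: how often the task occurs
    pain: int            # 1-5: how annoying it is today
    data_readiness: int  # 1-5: complete, legal to use, structured
    blast_radius: int    # 1-5: 5 means a failure hurts many people badly


def priority_score(uc: UseCase) -> float:
    """Higher is better. The weights are illustrative assumptions."""
    benefit = uc.value * 2 + uc.frequency * uc.pain / 5
    risk_penalty = uc.blast_radius * 1.5
    return benefit + uc.data_readiness - risk_penalty


# Hypothetical candidates with made-up ratings.
candidates = [
    UseCase("FAQ replies", value=4, frequency=5, pain=4, data_readiness=4, blast_radius=2),
    UseCase("Lead scoring", value=5, frequency=3, pain=2, data_readiness=2, blast_radius=4),
]

ranked = sorted(candidates, key=priority_score, reverse=True)
for uc in ranked:
    print(f"{uc.name}: {priority_score(uc):.1f}")
```

The score only orders the shortlist. Ownership, the pilot window, and the baseline still need a human decision.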

Define success like a contract: one primary metric (e.g., first response time) and up to two guardrails (e.g., accuracy ≥ 95%, no PII leaks). Keep the pilot narrow (a single team, limited data scope, staged rollout) and compare it to a baseline or control period. If the metric clears your threshold within the time box, scale; if not, document the learning and sunset fast. Speed matters, but so does traceability: log prompts, decisions, and outcomes so you can explain wins and unwind misses.

| Use Case | Primary Metric | Guardrail | 30‑Day Target |
|---|---|---|---|
| AI FAQ Assistant | First response time | Answer accuracy ≥ 95% | −60% |
| Email Draft Helper | Time to first draft | Tone match ≥ 90% | −50% |
| Invoice Anomaly Flag | Detected errors | False positives ≤ 10% | +40% |
| Lead Scoring | Lead-to-opportunity rate | No biased features | +15% |
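The "success as a contract" idea can be written down as a small check: one primary metric compared against its baseline, plus pass/fail guardrails. This is a minimal sketch. The function name `pilot_decision`, the guardrail labels, and the numbers in the example are assumptions for illustration.

```python
def pilot_decision(baseline, observed, target_change, guardrails):
    """Decide whether a pilot should scale or sunset.

    target_change is the required relative change versus baseline,
    e.g. -0.60 for "60% lower" or +0.40 for "40% higher".
    guardrails maps a guardrail name to True (passed) or False (failed).
    """
    change = (observed - baseline) / baseline
    if target_change < 0:
        metric_ok = change <= target_change   # a reduction target
    else:
        metric_ok = change >= target_change   # an increase target
    failed = sorted(name for name, passed in guardrails.items() if not passed)
    decision = "scale" if metric_ok and not failed else "sunset"
    return decision, change, failed


# Hypothetical FAQ assistant pilot: first response time in minutes.
answer_accuracy = 0.96
decision, change, failed = pilot_decision(
    baseline=120.0,
    observed=45.0,
    target_change=-0.60,
    guardrails={"accuracy >= 95%": answer_accuracy >= 0.95, "no PII leaks": True},
)
print(decision, f"{change:+.1%}", failed)
```

Writing the threshold down before the pilot starts is the point. The code only keeps the comparison honest afterwards.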

Build a data and security foundation with governance, privacy, and access controls

AI becomes usable only when your information is trustworthy and your guardrails are intentional. Start by mapping where data originates, how it’s transformed, and who touches it; then attach clear classifications (public, internal, confidential) that drive policy. Bake privacy into design with data minimization, explicit consent, and documented retention windows. Enforce least-privilege access through groups, not individuals, and require MFA everywhere. Keep secrets in a vault, encrypt data in transit and at rest, and separate development from production. Treat models and prompts as part of your data estate: log access, version datasets, and decide upfront which fields are never allowed to reach an AI system.

- Catalog your sources and owners; label sensitive fields (PII, PHI, PCI).

- Gate training data with an approval workflow and keep a data lineage record.

- Apply DLP and masking/redaction for prompts, files, and chat logs.

- Run quarterly access reviews; automate joiner-mover-leaver processes.

- Draft an incident runbook with legal, customer notice, and rollback steps.
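To make the masking/redaction item concrete, here is a minimal sketch that scrubs a few obvious patterns from text before it is sent to an AI tool or written to a log. The regular expressions are simplistic assumptions (email addresses, 13-16 digit card-like numbers, and one US-style phone format). They will miss many real-world formats and are no substitute for a proper DLP product.

```python
import re

# Simplistic illustrative patterns; real PII detection needs far more coverage.
PATTERNS = [
    ("[EMAIL]", re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")),
    ("[CARD]", re.compile(r"\b(?:\d[ -]?){13,16}\b")),
    ("[PHONE]", re.compile(r"\b\d{3}[-. ]\d{3}[-. ]\d{4}\b")),
]


def redact(text: str) -> str:
    """Replace each matched pattern with a placeholder label."""
    for label, pattern in PATTERNS:
        text = pattern.sub(label, text)
    return text


sample = "Reach jane@example.com or 555-123-4567, card 4111 1111 1111 1111."
print(redact(sample))
```

Running redaction before the prompt leaves your systems means the vendor's logs never see the raw values, whatever their retention settings are.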

| Control | Why it matters | Starter move |
|---|---|---|
| IAM + MFA | Stops credential sprawl | Group-based roles, SSO, MFA by default |
| Classification | Right rules, right data | Tags: Public/Internal/Confidential |
| Encryption | Protects at rest/in transit | Managed keys; TLS everywhere |
| Audit Logging | Trace who did what | Centralize logs; immutable storage |
| DPA & Vendor Checks | Limits third‑party risk | Data maps; SOC 2/GDPR review |
| Backups & DR | Resilience under failure | Test restores; set RPO/RTO |

Keep the posture simple and repeatable: default‑deny access, private networking where possible, and explicit scopes for every integration. Train staff on privacy by default, rotate keys, and expire tokens quickly. For regulated data, lock down model inputs with allowlists and escrow sensitive analytics in a sandbox. Define measurable health checks (percent of sources cataloged, access review closure time, restore test success rate) so you know the foundation holds as you scale from pilot to production. This structure doesn’t slow innovation; it makes fast experimentation safe, reversible, and auditable.
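An allowlist for model inputs can be as small as a set of approved field names applied right before a record is turned into a prompt. The sketch below assumes a hypothetical support-ticket record. The field names and the `to_model_input` helper are made up for illustration.

```python
# Hypothetical set of fields approved to reach an AI system.
ALLOWED_FIELDS = {"ticket_id", "subject", "product", "message"}


def to_model_input(record: dict) -> tuple[dict, list[str]]:
    """Keep only allowlisted fields and report which fields were dropped.

    Default-deny: any field not explicitly approved is removed.
    """
    clean = {key: value for key, value in record.items() if key in ALLOWED_FIELDS}
    dropped = sorted(set(record) - ALLOWED_FIELDS)
    return clean, dropped


ticket = {
    "ticket_id": 1042,
    "subject": "Late delivery",
    "message": "My order has not arrived.",
    "customer_email": "jane@example.com",
    "card_last4": "1111",
}
clean, dropped = to_model_input(ticket)
print(clean)
print("dropped:", dropped)
```

Logging the dropped field names (not their values) gives the access review something concrete to audit.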

Plan the economics, tooling choices, total cost, and realistic ROI milestones

Start with the baseline. Quantify current costs and time spent on the target workflow, then model a lean case that includes best/worst scenarios. Build a total cost of ownership view across one-time and recurring items, and pick the smallest tooling stack that meets today’s need with a path to grow. Favor contracts that allow month-to-month exit and monitor usage-based fees to avoid surprise bills. Keep data security and compliance in scope early; retrofitting controls is more expensive than planning them.

- One-time: discovery, data cleanup, prompt/playbook design, integration work, training, change management

- Recurring: model/API usage, orchestration tools, vector DB/storage, monitoring, human review, support

- Hidden: drift mitigation, re-prompting/retraining, vendor price changes, incident response
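The three cost buckets above add up to a simple total cost of ownership estimate. This is a back-of-the-envelope sketch. Every figure is a made-up placeholder, and treating hidden costs as a flat monthly reserve is an assumption.

```python
def total_cost_of_ownership(one_time, monthly_recurring, monthly_hidden, months):
    """Sum one-time costs plus recurring and hidden monthly costs over a horizon."""
    monthly = sum(monthly_recurring.values()) + sum(monthly_hidden.values())
    return sum(one_time.values()) + months * monthly


# Placeholder figures in your own currency; replace with real quotes.
one_time = {"data cleanup": 1500, "integration": 2000, "training": 500}
monthly_recurring = {"API usage": 150, "monitoring": 50, "human review": 200}
monthly_hidden = {"re-prompting": 50, "incident reserve": 50}

tco_12_months = total_cost_of_ownership(one_time, monthly_recurring, monthly_hidden, months=12)
print(tco_12_months)
```

Running the same function with best-case and worst-case inputs gives the range to compare against the baseline cost of the current workflow.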

| Approach | Startup | Monthly | Time-to-Value | Lock-in |
|---|---|---|---|---|
| SaaS add-on | Low | Low-Med | 1-2 weeks | High |
| No-code workflow | Low | Med | 2-4 weeks | Med |
| API integration | Med | Usage-based | 4-8 weeks | Low-Med |
| Open-source + fine-tune | Med-High | Low-Med | 6-10 weeks | Low |

Stage your returns. Define measurable checkpoints and gate each phase with go/no-go criteria that protect cash. Track value in simple terms (hours saved, cost per task, error rate, and revenue lift) and tie each measure to the original baseline. ROI is earned, not assumed: insist that each milestone pays for the next round of investment, and keep a sunset plan if benefits stall.

- Week 1-2: smoke test; ≥80% task eligibility; no security blockers

- Week 3-6: pilot; ≥20% cycle-time reduction; error rate ≤ baseline

- Month 2-3: expand; cost per task down ≥30%; NPS/CSAT stable or better

- Month 4-6: break-even on total spend; documented playbooks; alerting in place

- Month 6+: scale if unit economics remain favorable; otherwise pause or pivot
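The break-even milestone can be checked with a running total of savings against spend. The sketch below uses invented numbers and assumes a constant monthly cost with savings that ramp up as the pilot expands. The function name `break_even_month` is hypothetical.

```python
def break_even_month(one_time_cost, monthly_cost, monthly_savings):
    """Return the first month (1-based) where cumulative savings cover
    cumulative spend, or None if that never happens within the horizon."""
    net = -one_time_cost
    for month, savings in enumerate(monthly_savings, start=1):
        net += savings - monthly_cost
        if net >= 0:
            return month
    return None


# Invented figures: savings ramp up over six months of rollout.
savings_ramp = [0, 500, 900, 1200, 1500, 1500]
month = break_even_month(one_time_cost=3000, monthly_cost=400, monthly_savings=savings_ramp)
print(month)
```

If the function returns `None` for your realistic savings ramp, that is the signal to pause or pivot rather than extend the timeline by default.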

Select and test vendors and models with a pilot checklist, guardrails, and an exit strategy

Treat selection as an experiment, not a commitment. Shortlist two to three providers and run a time‑boxed pilot that mirrors your real workflows. Use a simple checklist to keep decisions evidence‑based:

- Business fit: Clear use cases, domain adaptation, reference customers in your size/industry.

- Data handling: PII controls, regional hosting, retention off by default, SOC 2/ISO attestations.

- Quality: Your own eval set, reproducible scores, latency/error SLOs, transparent versioning.

- Cost clarity: Token and storage pricing, egress fees, alerts and hard caps, forecast vs. actuals.

- Support & roadmap: SLA, incident comms, deprecation policy, fine‑tuning or tool-use options.
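For the "your own eval set" item, a tiny harness that runs the same questions through each shortlisted provider keeps scores reproducible. In the sketch below, `vendor_a` and `vendor_b` are stand-in functions, not real APIs, and the keyword check is a deliberately crude assumption about what counts as a correct answer.

```python
import time
from typing import Callable

# Each case is (prompt, keyword a correct answer should contain).
EVAL_SET = [
    ("What are your hours?", "9am"),
    ("Do you ship abroad?", "internationally"),
    ("Can I return an item?", "30 days"),
]


def vendor_a(prompt: str) -> str:
    """Stand-in for a real provider call (hypothetical)."""
    canned = {
        "What are your hours?": "We are open 9am to 5pm, Monday to Friday.",
        "Do you ship abroad?": "Yes, we ship internationally.",
    }
    return canned.get(prompt, "Sorry, I am not sure.")


def vendor_b(prompt: str) -> str:
    """Another stand-in that always deflects (hypothetical)."""
    return "Please contact support."


def evaluate(model: Callable[[str], str], cases: list[tuple[str, str]]) -> dict:
    """Score one model on accuracy (keyword match) and worst-case latency."""
    hits = 0
    latencies = []
    for prompt, expected in cases:
        start = time.perf_counter()
        answer = model(prompt)
        latencies.append(time.perf_counter() - start)
        hits += expected.lower() in answer.lower()
    return {"accuracy": hits / len(cases), "max_latency_s": max(latencies)}


results = {name: evaluate(fn, EVAL_SET) for name, fn in [("vendor_a", vendor_a), ("vendor_b", vendor_b)]}
for name, score in results.items():
    print(name, f"accuracy={score['accuracy']:.2f}")
```

In a real pilot the stand-ins would wrap each provider's client, and the eval set would come from your own tickets or documents rather than invented questions.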

| Option | Best for | Guardrail help | Exit path |
|---|---|---|---|
| Hosted general API | Fast start | Built‑in filters | Export prompts/logs |
| Managed open model | Control + cost | Custom policies | Self‑host same model |
| Vertical specialist | Domain accuracy | Templates + audits | Data portability |

Build guardrails into the pilot so scale doesn’t magnify risk. Keep humans in the loop for high‑impact steps, enforce least‑privilege access, and log everything for traceability. Stress‑test with adversarial prompts and edge cases, then set operational fences before production:

- Safety: Prompt hygiene, content filters, jailbreak tests, bias reviews.

- Reliability: Timeouts, retries, fallbacks, version pins, rollback plan.

- Cost control: Budgets, rate limits, max tokens, per‑team quotas.

- Observability: Prompt/output/cost telemetry, drift detection, feedback loops.

- Exit strategy: Termination clause, data deletion certificate, portability of prompts/evals, abstraction layer to swap models, parallel vendor ready, and a 30‑day rollback runbook.
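The reliability and exit-strategy items meet in a thin abstraction layer: application code calls one function, and the providers behind it can be retried, swapped, or removed. The sketch below uses stand-in provider functions, not real vendor SDKs. It shows retries and fallback only. Request timeouts would be set in each real client and are not implemented here.

```python
import time
from typing import Callable, Sequence


class AllProvidersFailed(RuntimeError):
    """Raised when every provider has exhausted its retries."""


def complete_with_fallback(
    prompt: str,
    providers: Sequence[tuple[str, Callable[[str], str]]],
    retries: int = 2,
    backoff_s: float = 0.0,
) -> tuple[str, str]:
    """Try providers in order; retry each a few times before falling back.

    Returns (provider_name, answer) from the first successful call.
    """
    errors = []
    for name, call in providers:
        for attempt in range(1, retries + 1):
            try:
                return name, call(prompt)
            except Exception as exc:  # a real client would catch narrower errors
                errors.append(f"{name} attempt {attempt}: {exc}")
                time.sleep(backoff_s * attempt)
    raise AllProvidersFailed("; ".join(errors))


def flaky_primary(prompt: str) -> str:
    """Stand-in primary provider that always times out (hypothetical)."""
    raise TimeoutError("primary timed out")


def steady_backup(prompt: str) -> str:
    """Stand-in backup provider (hypothetical)."""
    return f"Draft reply for: {prompt}"


used, answer = complete_with_fallback(
    "Where is my order?",
    providers=[("primary", flaky_primary), ("backup", steady_backup)],
)
print(used, "->", answer)
```

Because callers only see `complete_with_fallback`, replacing a vendor means editing the provider list, not every workflow that uses it.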

In Retrospect

If AI feels less like a silver bullet and more like a new set of tools in an unfamiliar workshop, that’s about right. The point isn’t to chase every shiny object; it’s to pick the right instrument for a well-defined job, with your eyes open to costs, risks, and maintenance. Use this guide as your safety goggles and checklist. Start by clarifying the business outcome, auditing your data, and choosing a small, reversible problem to pilot. Measure what matters, mind the legal and ethical guardrails, and prepare your team for new workflows before you scale. You don’t have to become “an AI company” to benefit; you have to be a disciplined business that uses AI where it makes sense. If the best move today is “not yet,” that’s still progress: now you know why, and what would need to change. When you are ready, place one modest bet, time-box it, document what you learn, and let evidence, not enthusiasm, decide the next step. Think of AI less as a revolution and more as a renovation: planned, staged, and inspected as you go. Done that way, it doesn’t just add features; it builds capability.